Among the many announcements at its 2024 Partner Summit today, including new AR glasses, an updated UI, and other features, Snapchat has also announced that users will soon be able to create short video clips in the app, based on text prompts.

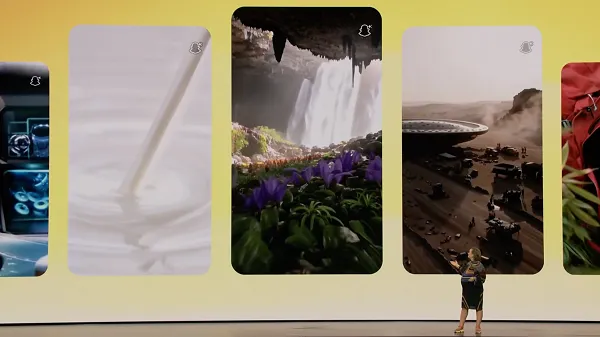

As you can see in this example, Snap is close to launching a new feature that will be able to generate short video clips, based on whatever text input you choose.

So, as per this example, you could enter “rubber duck floating” and the system will be able to generate that as a video clip, while there’s also a “Style” option to help refine and customize your video as you like.

Snap says that the system will also, eventually, be able to animate images, which will significantly expand the capacity of its current AI offerings.

In fact, it goes further than the AI processes offered by both Meta and TikTok. Both Meta and ByteDance do have their own working text-to-video models, but they’re not available in their respective apps as yet.

Though Snap’s isn’t either. Snap says that its AI video generator will be made available to a small subset of creators in beta from this week, but it still has some way to go before it’s ready for a broader launch.

So in some ways, Snap’s beating the others to the punch, but then again, either Meta or TikTok could greenlight their own versions and immediately match Snap in this respect.

Videos generated by the tool will include a Snap AI watermark (you can see the Snapchat+ icon in the top right of the examples shown in the presentation), while Snap is also doing development work to ensure that some of the more questionable uses of generative AI aren’t available in the tool.

Snapchat also announced a range of other AI tools to assist creators, including its GenAI Suite for Lens Studio, which will facilitate text-to-AR object creation, simplifying the process.

It’s also adding animation tools based on the same logic, so you can bring Bitmoji to life within your AR experiences, with all of these options using AI to streamline and improve Snap’s various creative processes.

Though AI video still seems weird, and really, not overly conducive to what Snap has traditionally been about: sharing your personal, real-life experiences with friends.

Do you really want to be generating hyper-real AI videos to share in the app? Is that going to enhance or detract from the Snap experience?

I get why social platforms are going this route, as they try to ride the AI wave and maximize engagement, while also justifying their investment in AI tools. But I don’t know that social apps, which are built upon a foundation of human, social experiences, really benefit from AI-generated content, which isn’t real, never happened, and doesn’t depict anybody’s actual lived experience.

Maybe I’m missing the point, and there’s no doubt that the technological advancement of such tools is amazing. But I just don’t see it being a big deal to Snapchat users. A novelty, sure, but an enduring, engaging feature? Probably not.

Either way, Snap, once again, is looking to hitch its wagon to the AI hype train in order to keep up with the competition, and if it has the capacity to enable this, why not, I guess.

It’s still a way off a proper launch, but it looks to be coming sometime soon.