Revelations that OpenAI secretly funded and had access to the FrontierMath benchmarking dataset are raising concerns about whether it was used to train its o3 AI reasoning model, and about the validity of the model’s high scores.

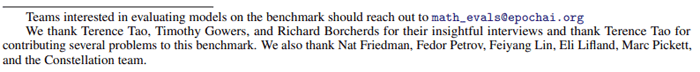

In addition to accessing the benchmarking dataset, OpenAI funded its creation, a fact that was withheld from the mathematicians who contributed to creating FrontierMath. Epoch AI belatedly disclosed OpenAI’s funding only in the final version of the paper published on Arxiv.org, which announced the benchmark. Earlier versions of the paper omitted any mention of OpenAI’s involvement.

Screenshot Of FrontierMath Paper

Closeup Of Acknowledgement

Earlier Version Of Paper That Lacked Acknowledgement

OpenAI o3 Model Scored Highly On FrontierMath Benchmark

News of OpenAI’s secret involvement is raising questions about the high scores achieved by the o3 reasoning model and causing disappointment with the FrontierMath project. Epoch AI responded with transparency about what happened and what it is doing to check whether the o3 model was trained on the FrontierMath dataset.

Giving OpenAI access to the dataset was unexpected because the whole point of the dataset is to test AI models, and that can’t be done if the models know the questions and answers beforehand.

A post in the r/singularity subreddit expressed this disappointment and cited a document claiming that the mathematicians didn’t know about OpenAI’s involvement:

“Frontier Math, the recent cutting-edge math benchmark, is funded by OpenAI. OpenAI allegedly has access to the problems and solutions. This is disappointing because the benchmark was sold to the public as a means to evaluate frontier models, with support from renowned mathematicians. In reality, Epoch AI is building datasets for OpenAI. They never disclosed any ties with OpenAI before.”

The Reddit discussion cited a post that revealed OpenAI’s deeper involvement:

“The mathematicians creating the problems for FrontierMath were not (actively)[2] communicated to about funding from OpenAI.

…Now Epoch AI or OpenAI don’t say publicly that OpenAI has access to the exercises or answers or solutions. I have heard second-hand that OpenAI does have access to exercises and answers and that they use them for validation.”

Tamay Besiroglu (LinkedIn Profile), associate director at Epoch AI, acknowledged that OpenAI had access to the datasets but also asserted that there was a “holdout” dataset that OpenAI didn’t have access to.

He wrote in the cited document:

“Tamay from Epoch AI right here.

We made a mistake in not being more transparent about OpenAI’s involvement. We were restricted from disclosing the partnership until around the time o3 launched, and in hindsight we should have negotiated harder for the ability to be transparent to the benchmark contributors as soon as possible. Our contract specifically prevented us from disclosing information about the funding source and the fact that OpenAI has data access to much but not all of the dataset. We own this error and are committed to doing better in the future.

Regarding training usage: We acknowledge that OpenAI does have access to a large fraction of FrontierMath problems and solutions, with the exception of an unseen-by-OpenAI hold-out set that enables us to independently verify model capabilities. However, we have a verbal agreement that these materials will not be used in model training.

OpenAI has also been fully supportive of our decision to maintain a separate, unseen holdout set, an extra safeguard to prevent overfitting and ensure accurate progress measurement. From day one, FrontierMath was conceived and presented as an evaluation tool, and we believe these arrangements reflect that purpose.”

More Information About OpenAI & FrontierMath Revealed

Elliot Glazer (LinkedIn profile/Reddit profile), the lead mathematician at Epoch AI, confirmed that OpenAI has the dataset and that it was allowed to use it to evaluate o3, OpenAI’s next state-of-the-art large language model, which it calls a reasoning model. He offered his opinion that the high scores obtained by the o3 model are “legit” and said that Epoch AI is conducting an independent evaluation to determine whether or not o3 had access to the FrontierMath dataset for training, which could cast the model’s high scores in a different light.

He wrote:

“Epoch’s lead mathematician here. Yes, OAI funded this and has the dataset, which allowed them to evaluate o3 in-house. We haven’t yet independently verified their 25% claim. To do so, we’re currently developing a hold-out dataset and will be able to test their model without them having any prior exposure to these problems.

My personal opinion is that OAI’s score is legit (i.e., they didn’t train on the dataset), and that they have no incentive to lie about internal benchmarking performances. However, we can’t vouch for them until our independent evaluation is complete.”

Glazer also shared that Epoch AI was going to test o3 using a “holdout” dataset that OpenAI didn’t have access to, saying:

“We’re going to evaluate o3 with OAI having zero prior exposure to the holdout problems. This will be airtight.”

Another post on Reddit by Glazer described how the “holdout set” was created:

“We’ll describe the process more clearly when the holdout set eval is actually done, but we’re choosing the holdout problems at random from a larger set which will be added to FrontierMath. The production process is otherwise identical to how it’s always been.”

Waiting For Answers

That’s where the drama stands until Epoch AI completes its evaluation, which will indicate whether OpenAI trained its AI reasoning model on the dataset or only used it for benchmarking.

Featured Image by Shutterstock/Antonello Marangi