Google has made a change to the way its search results are served, which may also help secure it against bots and scrapers. Whether this will have a further effect on SEO tools, or whether those tools can use a headless Chrome that runs JavaScript, remains an open question for now, but it's likely that Google is using rate limiting to throttle how many pages can be requested within a set period of time.

Google Search Now Requires JavaScript

Google quietly updated its search box to require all users, including bots, to have JavaScript turned on when searching.

Surfing Google Search without JavaScript turned on results in the following message:

Turn on JavaScript to keep searching

The browser you're using has JavaScript turned off. To continue your search, turn it on.

Screenshot Of Google Search JavaScript Message

In an email to TechCrunch, a Google spokesperson shared the following details:

"Enabling JavaScript allows us to better protect our services and users from bots and evolving forms of abuse and spam, …and to provide the most relevant and up-to-date information."

JavaScript possibly enables personalization in the search experience, which may be what the spokesperson means by providing the most relevant information. But JavaScript can also be used for blocking bots.

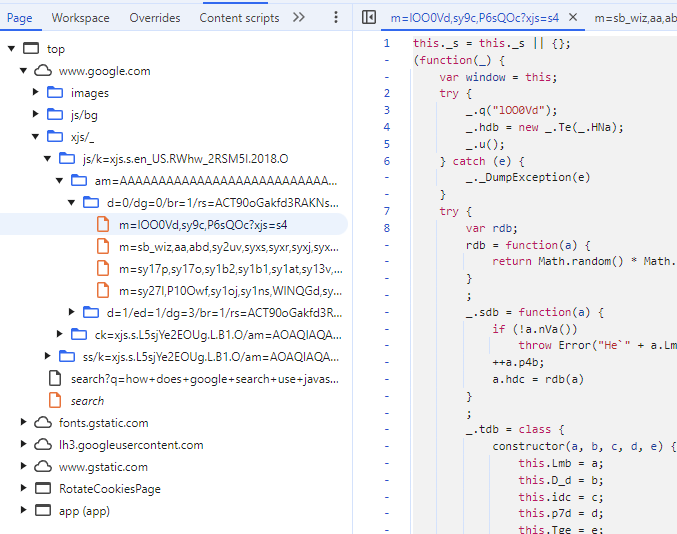

Using the latest version of Chrome, I copied some of the JavaScript and ran it through ChatGPT to ask what it does. One part of it may relate to restricting abusive requests for documents.

Screenshot Of Chrome DevTools

ChatGPT gave me the following feedback:

"Core Functionalities

Randomized Value Generation (rdb)

Generates a random value based on properties (D_d, idc, and p4b) of the input object a, constrained by p7d.

This may be used for rate-limiting, exponential backoff, or similar logic.

Purpose and Context

From its components, the script:

Likely handles request retries or access control for web resources.

Implements a policy enforcement system, where:

Policies determine if requests are valid.

Errors are logged and sometimes retried based on rules.

Randomized delays or limits might control the retry mechanism.

Appears optimized for error handling and resilience in distributed or high-traffic systems, possibly within a Google service or API."

ChatGPT said that the code may use rate-limiting, which is a way to limit the number of actions a user or a system can take within a specific time period.

Rate-Limiting:

Used to enforce a limit on the number of actions (e.g., API requests) a user or system can perform within a specific timeframe.

In this code, the random values generated by rdb could be used to introduce variability in when or how often requests are allowed, helping to manage traffic effectively.
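To make the idea of rate limiting concrete, here is a minimal Python sketch of a sliding-window rate limiter. It is not the code from Google's script; the class name, its parameters, and the injectable clock are hypothetical and chosen only for illustration. It tracks one client; a real service would typically keep one window per client or IP address.

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` within any `window_seconds` span.

    Hypothetical illustration of rate limiting, not Google's implementation.
    """

    def __init__(self, max_requests: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested deterministically
        self._timestamps: Deque[float] = deque()

    def allow(self) -> bool:
        now = self.clock()
        # Forget requests that have fallen outside the current window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False  # over the limit: reject (or delay) this request
```

With `max_requests=3` and `window_seconds=10.0`, the first three calls in a ten-second span return `True` and the fourth returns `False` until the oldest request ages out of the window.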

Exponential Backoff:

ChatGPT explained that exponential backoff is a way to limit the number of retries a user or system is allowed to make for a failed action. The time between retries for a failed action increases exponentially.
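A minimal Python sketch of exponential backoff, assuming a simple retry budget and a doubling delay, looks like this. The function name `retry_with_backoff` and its parameters are hypothetical; this illustrates the general technique ChatGPT described, not what Google's script actually does.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(action: Callable[[], T], max_retries: int = 4,
                       base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `action`, retrying on failure with exponentially growing delays.

    Hypothetical illustration. The wait before retry n (0-based) is
    base_delay * 2**n, i.e. 0.5s, 1s, 2s, 4s, ... After `max_retries`
    failed retries, the last exception is re-raised.
    """
    for attempt in range(max_retries + 1):
        try:
            return action()
        except Exception:
            if attempt == max_retries:
                raise  # retry budget exhausted
            sleep(base_delay * 2 ** attempt)  # the wait doubles on every failure
    raise AssertionError("unreachable")
```

Passing a custom `sleep` function makes it easy to record the delays instead of actually waiting, which is how the behavior can be checked without slowing anything down.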

Similar Logic:

ChatGPT explained that random value generation could be used to manage access to resources and prevent abusive requests.
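One common way randomness is combined with backoff is "full jitter": instead of waiting exactly base_delay * 2**n, a client waits a random amount between zero and that value, capped at a maximum. This spreads retries out so many clients don't hit a server in lockstep. The Python sketch below is a hypothetical illustration of that technique; the function name and parameters are assumptions, and nothing here claims to reproduce the rdb function in Google's script.

```python
import random
from typing import Optional


def full_jitter_delay(attempt: int, base_delay: float = 0.5, cap: float = 30.0,
                      rng: Optional[random.Random] = None) -> float:
    """Return a random delay in [0, min(cap, base_delay * 2**attempt)].

    Hypothetical illustration of "full jitter" backoff: the upper bound grows
    exponentially with each attempt but never exceeds `cap`, and the actual
    delay is drawn uniformly below that bound.
    """
    if rng is None:
        rng = random.Random()
    ceiling = min(cap, base_delay * 2 ** attempt)
    return rng.uniform(0.0, ceiling)
```

Passing a seeded `random.Random` makes the delays reproducible for testing, while the default produces different delays for every caller, which is the point of adding jitter.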

I don't know for certain that this is what that specific JavaScript is doing; that's what ChatGPT explained, and it definitely matches the information Google shared that it is using JavaScript as part of its strategy for blocking bots.