The FTC has scored a major victory against misleading practices by social media apps, albeit through a smaller player in the space.

Today, the FTC announced that private messaging app NGL, which became a hit with teen users back in 2022, will be fined $5 million and banned from allowing people under 18 to use the app at all, due to misleading practices and regulatory violations.

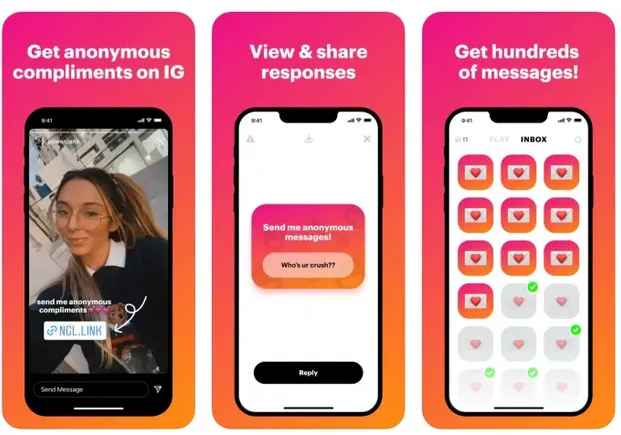

NGL’s key value proposition is that it enables users to post anonymous replies to questions posed by other users of the app. Users can share their NGL questions on IG and Snapchat, prompting recipients to submit their responses via the NGL platform. Users are then able to view those responses, without information on who sent them. If they want to know who actually sent each message, however, they can pay a monthly subscription fee for full functionality.

The FTC found that NGL had acted deceptively in several ways, first by simulating responses when real humans didn’t respond.

As per the FTC:

“Many of those anonymous messages that users were told came from people they knew – for example, “one of your friends is hiding s[o]mething from u” – were actually fakes sent by the company itself in an effort to induce additional sales of the NGL Pro subscription to people eager to learn the identity of who had sent the message.”

So if you paid, you were only revealing that a bot had sent you a message.

The FTC also alleges that NGL’s UI did not clearly state that its charge for revealing a sender’s identity was a recurring fee, as opposed to a one-off cost.

But even more concerningly, the FTC found that NGL failed to implement adequate protections for teens, despite touting “world class AI content moderation” that enabled them to “filter out harmful language and bullying.”

“The company’s much vaunted AI often failed to filter out harmful language and bullying. It shouldn’t take artificial intelligence to anticipate that teens hiding behind the cloak of anonymity would send messages like “You’re ugly,” “You’re a loser,” “You’re fat,” and “Everyone hates you.” But a media outlet reported that the app failed to screen out hurtful (and all too predictable) messages of that sort.”

The FTC was particularly pointed about the proclaimed use of AI to reassure users (and parents):

“The defendants’ unfortunately named “Safety Center” accurately anticipated the apprehensions parents and educators would have about the app and attempted to assure them with promises that AI would solve the problem. Too many companies are exploiting the AI buzz du jour by making false or deceptive claims about their supposed use of artificial intelligence. AI-related claims aren’t puffery. They’re objective representations subject to the FTC’s long-standing substantiation doctrine.”

It’s the first time that the FTC has implemented a full ban on children using a messaging app, and it could help the agency establish new precedent around teen safety measures across the industry.

The FTC is also looking to implement expanded restrictions on how Meta uses teen user data, while it’s also seeking to establish more definitive rules around ads targeted at users under 13.

Meta’s already implementing more restrictions on this front, stemming both from EU law changes and proposals from the FTC. But the regulatory group is seeking more concrete enforcement measures, along with industry standard processes for verifying user ages.

In the case of NGL, some of these violations were more blatant, leading to increased scrutiny overall. But the case does open up more scope for expanded measures in other apps.

So while you may not use NGL, and may not have been exposed to the app, the expanded ripple effect could still be felt.