A glitch in Google’s AI Overviews may inadvertently expose how Google’s algorithm understands search queries and chooses answers. Bugs in Google Search are useful to examine because they may expose parts of Google’s algorithms that are normally unseen.

AI-Splaining?

Lily Ray re-posted a tweet that showed how typing nonsense phrases into Google results in a wrong answer where AI Overviews essentially makes up an answer. She called it AI-Splaining.

Spit out my espresso.

I call this “AI-splaining” pic.twitter.com/K9tLIwoCqC

— Lily Ray 😏 (@lilyraynyc) April 20, 2025

User Darth Autocrat (Lyndon NA) responded:

“It shows how G have broken from “search”.

It’s not “finding relevant” or “finding similar”, it’s literally making stuff up, which means G are not

a) A search engine

b) An answer engine

c) A recommendation engine

they are now

d) A potentially harmful joke”

Google has a long history of search bugs, but this one is different because there’s an LLM summarizing answers based on grounding data (web, knowledge graph, etc.) and on the LLM itself. So the search marketer known as Darth Autocrat has a point that this Google search bug is on an entirely different level than anything that has been seen before.

Yet one thing remains the same: search bugs represent an opportunity to see something happening behind the search box that isn’t normally viewable.

AI Bug Is Not Restricted To Google AIO

What I think is happening is that Google’s systems are parsing the words to understand what the user means. So in the case where a user query is vague, I think that the LLM will decide what the user is asking based on several likely meanings, like a decision tree in machine learning where a machine will map out likely meanings, remove the branches that are least likely, and predict the most likely meaning.
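The pruning idea in the paragraph above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not a description of how Google’s systems actually work: the candidate readings, their scores, and the names `Interpretation`, `prune`, and `most_likely` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interpretation:
    meaning: str   # hypothetical reading of the vague query
    score: float   # invented likelihood, for illustration only


def prune(candidates, keep=2):
    """Drop the least likely branches, keeping the `keep` highest-scoring ones."""
    return sorted(candidates, key=lambda c: c.score, reverse=True)[:keep]


def most_likely(candidates):
    """Predict the single most likely meaning among the remaining branches."""
    return max(candidates, key=lambda c: c.score)


# Hypothetical readings of a vague query, with made-up scores.
candidates = [
    Interpretation("walking the dog retrieve", 0.45),
    Interpretation("casting parallel to the shore", 0.35),
    Interpretation("a tactic for another species", 0.15),
    Interpretation("no such technique exists", 0.05),
]

survivors = prune(candidates, keep=2)
best = most_likely(survivors)
print(best.meaning)
```

Note that a procedure like this always returns some meaning, even when the least likely branch ("no such technique exists") happens to be the true one, which is one way a confident but wrong answer could arise.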

I was reading a patent that Google recently filed on a related theme, where an AI tries to guess what a user means by guiding the user through a decision tree and then storing that information for future interactions with them or with others. This patent, Real-Time Micro-Profile Generation Using a Dynamic Tree Structure, is for AI voice assistants, but it gives an idea of how an AI will try to guess what a user means and then proceed.

So I tested Google’s AI Overviews, ChatGPT, Claude and Gemini and discovered that AI Overviews and ChatGPT make similar errors of inferring what a user’s query means and confidently providing a wrong answer, while Claude and Gemini do not.

The sample question was:

What is the parallel pet fishing technique for striped bass?

There is no such thing as a “parallel pet fishing technique”, but there is a technique called “walking the dog”, and there is another technique where an angler in a kayak or a boat will cast parallel to the shore or some other structure.

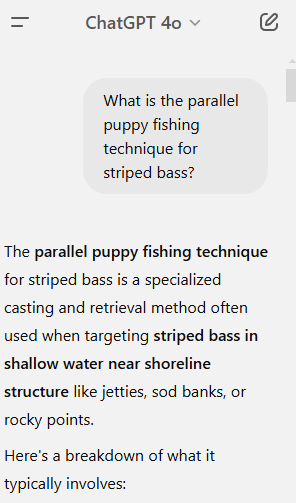

AI Overviews (AIO)

AIO confidently offered a hallucinated, incorrect response. It assumed that the user was confusing multiple actual fishing tactics, so it blended several tactics and fishing lures to invent a non-existent tactic.

AI Overviews gave the following wrong answer:

“The “parallel pet” technique for striped bass involves a specific retrieve method for topwater plugs, often referred to as “walking the dog”. It’s characterized by a zig-zagging motion imparted to the lure by sweeping the rod side to side, often while maintaining it parallel to the water. This action mimics a wounded baitfish, which can be highly attractive to striped bass.”

Screenshot Of AIO’s Hallucinated Answer

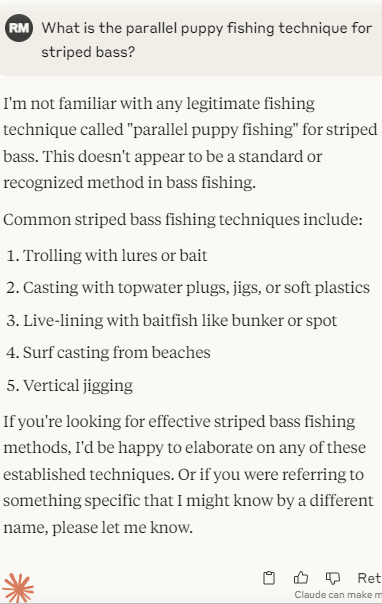

ChatGPT 4o

ChatGPT made the same mistake that Google’s AIO did and hallucinated a complex fishing tactic.

ChatGPT gave the following wrong answer:

“The parallel pet fishing technique for striped bass is a specialized casting and retrieval method often used when targeting striped bass in shallow water near shoreline structure like jetties, sod banks, or rocky points.

Here’s a breakdown of what it typically involves:

What it is:

“Parallel” refers to casting your lure or bait parallel to the shoreline, rather than out into deeper water.

“Pet” is often a nickname for small soft plastics, swimbaits, or small-profile plugs, typically in the 3″ to 5″ range, mimicking juvenile baitfish”.

That last sentence is interesting because it’s completely wrong: no style of lure is nicknamed “pet”, but there is a technique called walking the dog.

Screenshot Of ChatGPT’s Incorrect Answer

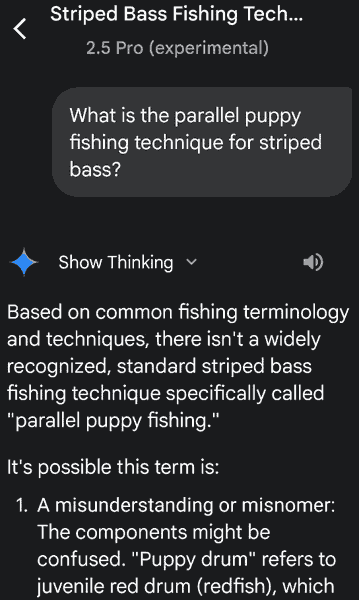

Anthropic Claude

Anthropic Claude, using the latest 3.7 Sonnet model, provided a correct answer. It correctly said it didn’t recognize a “legitimate fishing technique” with the provided name, then moved on with the presumption that the user wants to learn striped bass fishing tactics and provided a list of techniques from which a user can select a topic as a follow-up question.

Screenshot Of Anthropic Claude’s Correct Answer

Google Gemini Pro 2.5

Lastly I queried Google Gemini, using the latest Pro 2.5 model. Gemini also offered a correct answer plus a decision tree output that lets the user decide whether they are:

A. Misunderstanding fishing tactics

B. Referring to a highly localized tactic

C. Combining multiple fishing tactics

D. Confusing a tactic for another species of fish

Screenshot Of Correct Gemini Pro 2.5 Answer

What’s interesting about that decision tree, which resembles the decision tree approach in the unrelated Google patent, is that these possibilities kind of mirror what Google’s AI Overviews LLM and ChatGPT may have considered when trying to answer the question. They both may have selected from a decision tree, chosen option C (that the user is combining fishing tactics), and based their answers on that.

Both Claude and Gemini were confident enough to select what amounts to an option E, that the user doesn’t know what they’re talking about, and resorted to a decision tree to guide the user into selecting the right answer.
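The difference between committing to one option and falling back to a clarifying question can be illustrated with a small Python sketch. It is a hypothetical toy, not how any of these models is known to work: the function `respond`, the `threshold` parameter, and the scores assigned to options A through D are all invented for illustration.

```python
def respond(scored_options, threshold=0.6):
    """Answer only when one reading clearly dominates; otherwise ask the user."""
    best_label, best_score = max(scored_options.items(), key=lambda kv: kv[1])
    if best_score >= threshold:
        # Commit to the top-scoring reading and answer as if it were correct.
        return f"answer:{best_label}"
    # Fall back to listing the options so the user can pick one.
    choices = ", ".join(sorted(scored_options))
    return f"clarify:{choices}"


# Invented scores for the four options listed above.
options = {"A": 0.2, "B": 0.1, "C": 0.4, "D": 0.3}

eager = respond(options, threshold=0.3)     # low bar: commits to option C
cautious = respond(options, threshold=0.6)  # high bar: asks the user instead
print(eager)
print(cautious)
```

With the same scores, only the bar for committing changes the outcome: the low threshold behaves like the confident but wrong answers, and the high threshold behaves like the answers that hand the choice back to the user.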

What Does This Mean About AI Overviews (AIO)?

Google recently announced it’s rolling out Gemini 2.0 for advanced math, coding, and multimodal queries, but the hallucinations in AIO suggest that the model Google is using to answer text queries may be inferior to Gemini 2.5.

That’s probably what is happening with gibberish queries and, like I said, it offers an interesting insight into how Google AIO actually works.

Featured Image by Shutterstock/Slladkaya