AI is shaping each subject by making abilities (akin to coding or information visualization) accessible to everybody, which weren’t obtainable previously.

An AI operator who can run the proper prompts can carry out low- and medium-level issue duties, permitting extra concentrate on strategic decision-making.

On this information, we’ll stroll you thru step-by-step the way to use AI chatbots with ChatGPT for example to run advanced BigQuery queries to your web optimization reporting wants.

We are going to evaluation two examples:

It’ll additionally provide you with an general concept of how you should use chatbots to cut back the burden when working web optimization reviews.

Why Do You Want To Be taught BigQuery?

web optimization instruments like Google Search Console or Google Analytics 4 have accessible consumer interfaces you should use to entry information. However typically, they restrict what you are able to do and present incomplete information, which is normally referred to as information sampling.

In GSC, this occurs as a result of the software omits anonymized queries and limits desk rows to as much as 1,000 rows.

Through the use of BigQuery, you may remedy that drawback and run any advanced reviews you need, eliminating the information sampling subject that happens very often when working with giant web sites.

(Alternatively, you might attempt utilizing Looker Studio, however the objective of this text is as an instance how one can function ChatGPT for BigQuery.)

For this text, we assume you could have already related your GSC and GA4 accounts to BigQuery. For those who haven’t completed it but, you might wish to examine our guides on the way to do it:

SQL Fundamentals

If you recognize Structured Question Language (SQL), you might skip this part. However for many who don’t, here’s a fast reference to SQL statements:

| Assertion | Description |

| SELECT | Retrieves information from tables |

| INSERT | Inserts new information right into a desk |

| UNNEST | Flattens an array right into a set of rows |

| UPDATE | Updates current information inside a desk |

| DELETE | Deletes information from a desk |

| CREATE | Creates a brand new desk or database |

| ALTER | Modifies an current desk |

| DROP | Deletes a desk or a database. |

The circumstances we might be utilizing so you may familiarize your self:

| Situation | Description |

| WHERE | Filters information for particular circumstances |

| AND | Combines two or extra circumstances the place all circumstances have to be true |

| OR | Combines two or extra circumstances the place at the very least one situation have to be true |

| NOT | Negates a situation |

| LIKE | Searches for a specified sample in a column. |

| IN | Checks if a price is inside a set of values |

| BETWEEN | Choose values inside a given vary |

| IS NULL | Checks for null values |

| IS NOT NULL | Checks for non-null values |

| EXISTS | Checks if a subquery returns any information |

Now, let’s dive into examples of how you should use BigQuery by way of ChatGPT.

1. How To Analyze Traffic Decline As a result of Of Google Algorithm Impression

When you’ve got been affected by a Google algorithm replace, the very first thing it is best to do is run reviews on affected pages and analyze why you could have been impacted.

Bear in mind, the worst factor you are able to do is begin altering one thing on the web site straight away in panic mode. This may occasionally trigger fluctuations in search site visitors and make analyzing the impression even tougher.

When you’ve got fewer pages within the index, you might discover utilizing GSC UI information passable for analyzing your information, however in case you have tens of 1000’s of pages, it gained’t allow you to export greater than 1,000 rows (both pages or queries) of knowledge.

Say you could have every week of knowledge because the algorithm replace has completed rolling out and wish to evaluate it with the earlier week’s information. To run that report in BigQuery, you might begin with this straightforward immediate:

Think about you're a information analyst skilled in Google Analytics 4 (GA4), Google Search Console, SQL, and BigQuery.

Your activity is to generate an SQL question to check 'WEB' Search Console information for the intervals '2024-05-08' to '2024-05-20' and '2024-04-18' to '2024-04-30'.

Extract the full clicks, impressions, and common place for every URL for every interval.

Moreover, calculate the variations in these metrics between the intervals for every URL

(the place common place ought to be calculated because the sum of positions divided by the sum of impressions).

Particulars:

BigQuery venture identify: use_your_bigquery_projectname

Dataset identify: searchconsole

Desk identify: searchdata_url_impression

Please present the SQL question that meets these necessities.

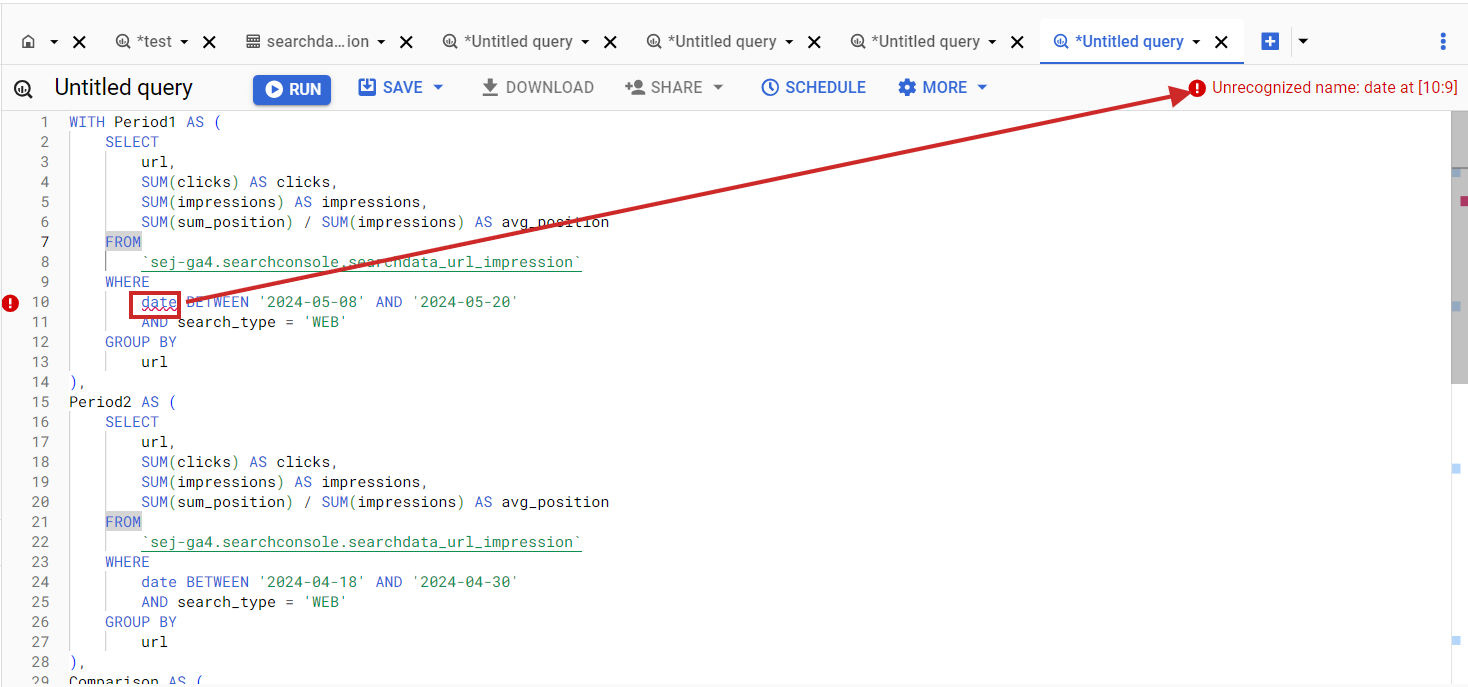

When you get an SQL code, copy and paste it into the BigQuery SQL editor, however I guess the preliminary code you’ll get can have errors. For instance, desk column names could not match what’s in your BigQuery dataset.

Error in BigQuery SQL when column identify doesn’t match the dataset column.

Error in BigQuery SQL when column identify doesn’t match the dataset column.Issues like this occur very often when performing coding duties by way of ChatGPT. Now, let’s dive into how one can shortly repair points like this.

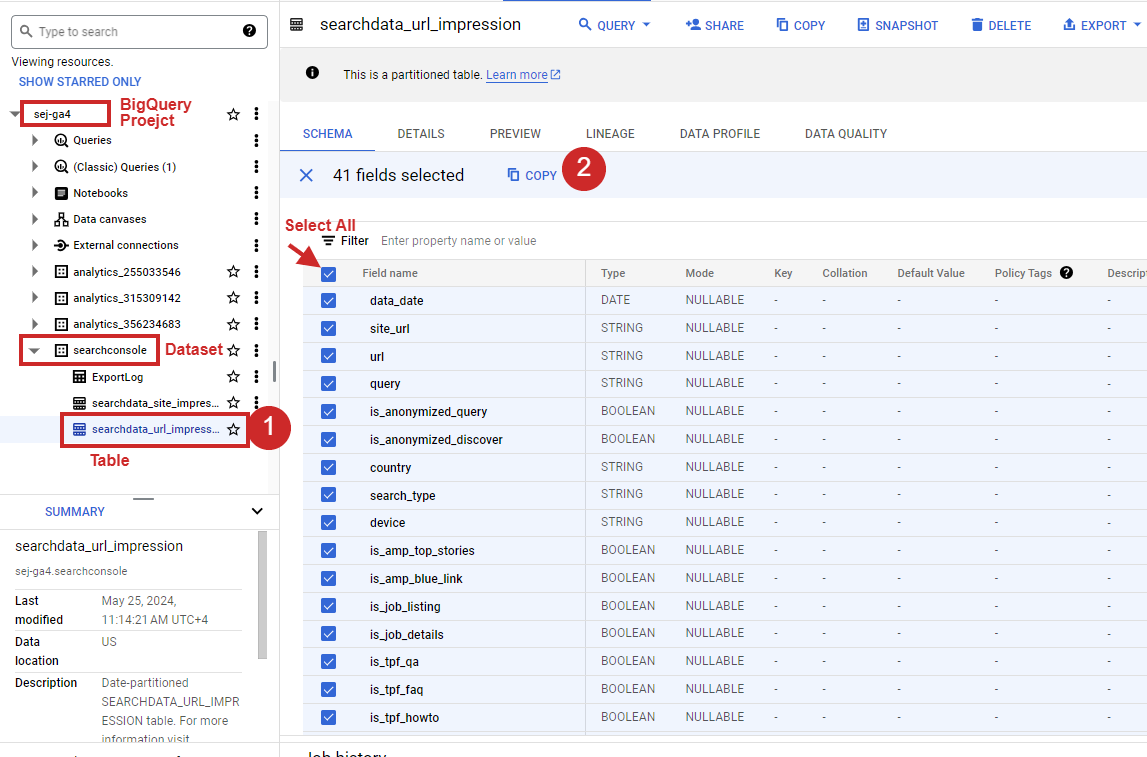

Merely click on in your dataset within the left-right panel, choose all columns on the proper aspect, and click on Copy as Desk.

The best way to choose all columns of the desk in BigQuery.

The best way to choose all columns of the desk in BigQuery.Upon getting it, simply copy and paste it as a follow-up immediate and hit enter.

Generate SQL once more by making an allowance for that my desk schema is as follows :

"fullname mode sort description

data_date DATE

site_url STRING

url STRING

question STRING

is_anonymized_query BOOLEAN

is_anonymized_discover BOOLEAN

nation STRING

search_type STRING

system STRING

is_amp_top_stories BOOLEAN

is_amp_blue_link BOOLEAN

is_job_listing BOOLEAN

is_job_details BOOLEAN

is_tpf_qa BOOLEAN

is_tpf_faq BOOLEAN

is_tpf_howto BOOLEAN

is_weblite BOOLEAN

is_action BOOLEAN

is_events_listing BOOLEAN

is_events_details BOOLEAN

is_search_appearance_android_app BOOLEAN

is_amp_story BOOLEAN

is_amp_image_result BOOLEAN

is_video BOOLEAN

is_organic_shopping BOOLEAN

is_review_snippet BOOLEAN

is_special_announcement BOOLEAN

is_recipe_feature BOOLEAN

is_recipe_rich_snippet BOOLEAN

is_subscribed_content BOOLEAN

is_page_experience BOOLEAN

is_practice_problems BOOLEAN

is_math_solvers BOOLEAN

is_translated_result BOOLEAN

is_edu_q_and_a BOOLEAN

impressions INTEGER

clicks INTEGER

sum_position INTEGER

is_product_snippets BOOLEAN

is_merchant_listings BOOLEAN

is_learning_videos BOOLEAN "This can repair the generated SQL in keeping with the GSC desk construction as follows:

WITH period1 AS (

SELECT

url,

SUM(clicks) AS clicks,

SUM(impressions) AS impressions,

IFNULL(SUM(sum_position) / NULLIF(SUM(impressions), 0), 0) AS avg_position

FROM

`use_your_bigquery_projectname.searchconsole.searchdata_url_impression`

WHERE

data_date BETWEEN '2024-05-08' AND '2024-05-20'

AND search_type="WEB"

GROUP BY

url

),

period2 AS (

SELECT

url,

SUM(clicks) AS clicks,

SUM(impressions) AS impressions,

IFNULL(SUM(sum_position) / NULLIF(SUM(impressions), 0), 0) AS avg_position

FROM

`use_your_bigquery_projectname.searchconsole.searchdata_url_impression`

WHERE

data_date BETWEEN '2024-04-18' AND '2024-04-30'

AND search_type="WEB"

GROUP BY

url

),

comparability AS (

SELECT

COALESCE(p1.url, p2.url) AS url,

IFNULL(p1.clicks, 0) AS clicks_period1,

IFNULL(p2.clicks, 0) AS clicks_period2,

IFNULL(p1.impressions, 0) AS impressions_period1,

IFNULL(p2.impressions, 0) AS impressions_period2,

IFNULL(p1.avg_position, 0) AS avg_position_period1,

IFNULL(p2.avg_position, 0) AS avg_position_period2,

IFNULL(p1.clicks, 0) - IFNULL(p2.clicks, 0) AS clicks_diff,

IFNULL(p1.impressions, 0) - IFNULL(p2.impressions, 0) AS impressions_diff,

IFNULL(p1.avg_position, 0) - IFNULL(p2.avg_position, 0) AS avg_position_diff

FROM

period1 p1

FULL OUTER JOIN

period2 p2

ON

p1.url = p2.url

)

SELECT

url,

clicks_period1,

clicks_period2,

clicks_diff,

impressions_period1,

impressions_period2,

impressions_diff,

avg_position_period1,

avg_position_period2,

avg_position_diff

FROM

comparability

ORDER BY

url;

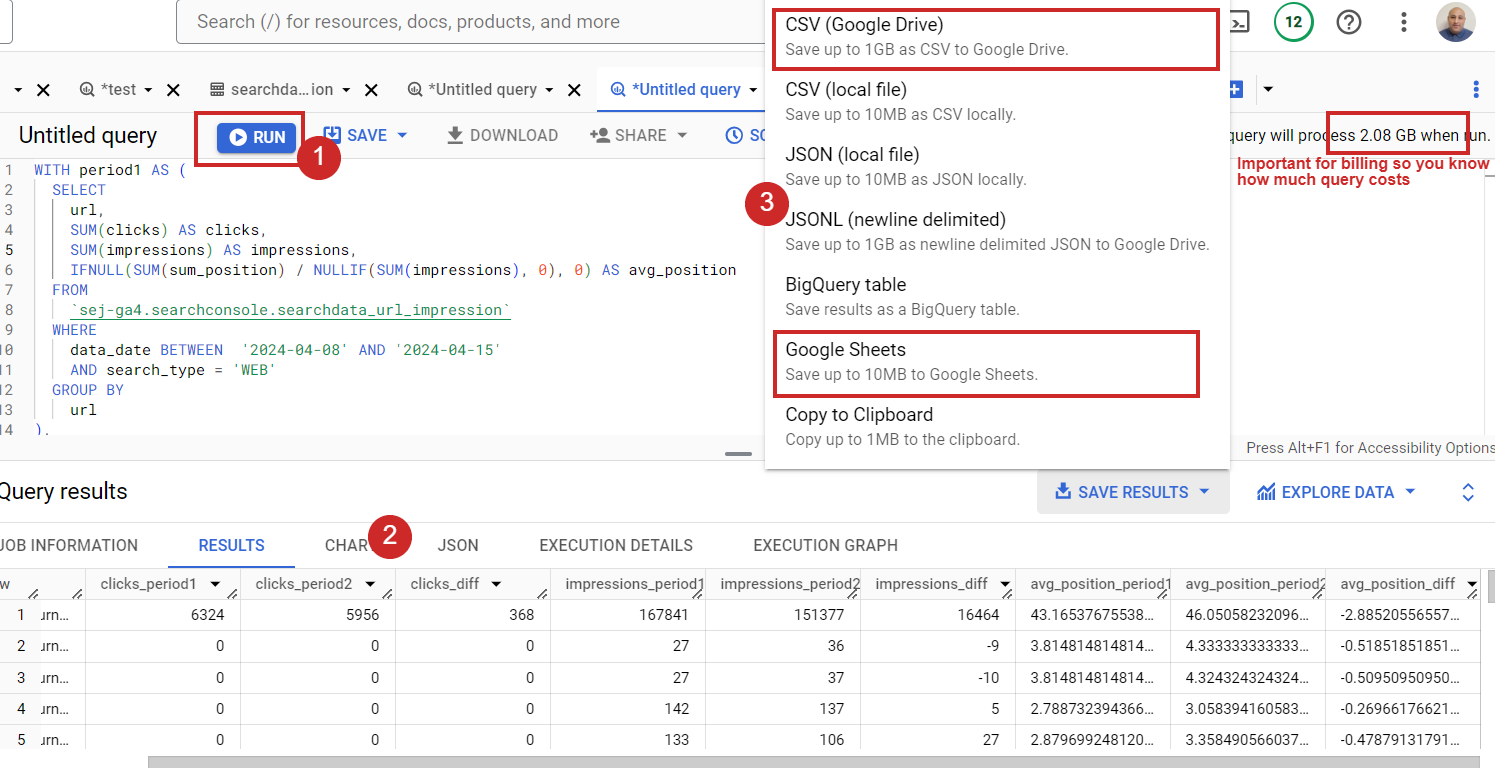

Now, run it and benefit from the information both by exporting it into the CSV or Google Sheets.

The best way to run SQL in BigQuery.

The best way to run SQL in BigQuery.In case you could have tens of millions of URLs, you might not be capable to work in Google Sheets or CSV export as a result of the information is simply too large. Plus, these apps have limitations on what number of rows you may have in a single doc. In that case, it can save you outcomes as a BigQuery desk and hook up with it with Looker Studio to view the information.

However please keep in mind that BigQuery is a freemium service. It’s free as much as 1 TB of processed question information a month. When you exceed that restrict, your bank card might be robotically charged primarily based in your utilization.

Which means if you happen to join your BigQuery to Looker Studio and browse your information there, it should depend in opposition to your billing each time you open your Looker dashboard.

That’s the reason, when exports have a couple of tens of 1000’s or lots of of 1000’s of rows, I like utilizing Google Sheets. I can simply join it to Looker Studio for information visualization and mixing, and this won’t depend in opposition to my billing.

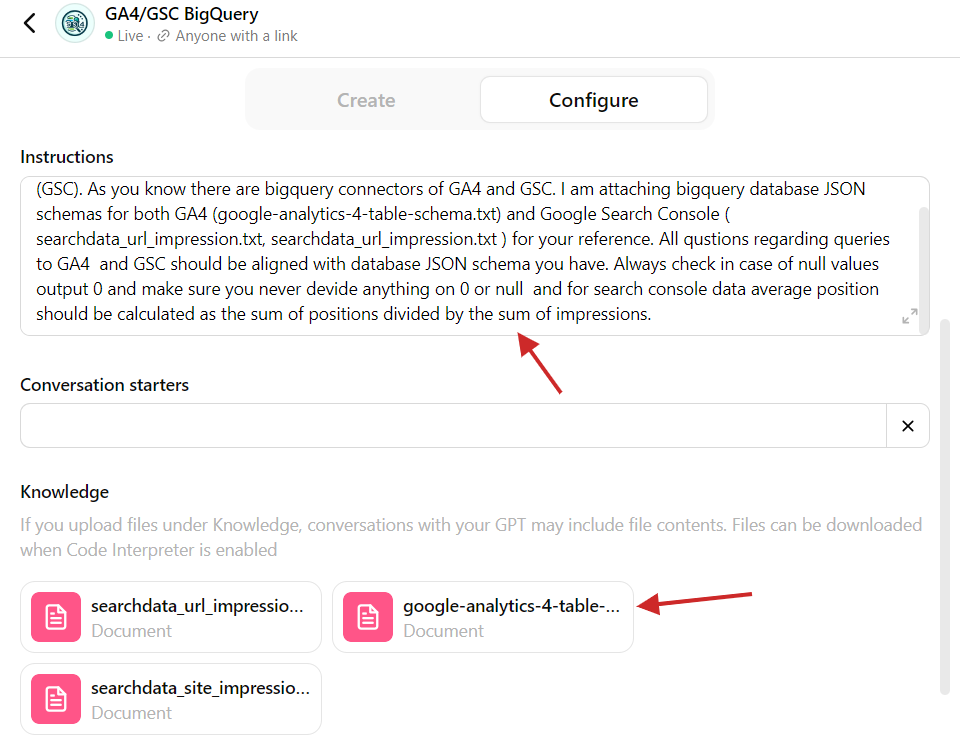

When you’ve got ChatGPT Plus, you may merely use this practice GPT I’ve made, which takes into consideration desk schemas for GA4 and Search Console. Within the above information, I assumed you had been utilizing the free model, and it illustrated how you should use ChatGPT general for working BigQuery.

In case you wish to know what’s in that customized GPT, right here is the screenshot of the backend.

Customized GPT with BigQuery desk schemas.

Customized GPT with BigQuery desk schemas.Nothing difficult – you simply want to repeat tables from BigQuery as JSON within the step defined above and add them into the customized GPT so it may well check with the desk construction. Moreover, there’s a immediate that asks GPT to check with the JSON recordsdata connected when composing queries.

That is one other illustration of how you should use ChatGPT to carry out duties extra successfully, eliminating repetitive duties.

If it’s essential work with one other dataset (completely different from GA4 or GSC) and also you don’t know SQL, you may add the desk schema from BigQuery into ChatGPT and compose SQLs particular to that desk construction. Straightforward, isn’t it?

As homework, I recommend you analyze which queries have been affected by AI Overviews.

There isn’t any differentiator within the Google Search Console desk to try this, however you may run a question to see which pages didn’t lose rating however had a big CTR drop after Might 14, 2024, when Google launched AI Overviews.

You possibly can evaluate the two-week interval after Might 14th with the 2 weeks prior. There may be nonetheless a chance that the CTR drop occurred due to different search options, like a competitor getting a Featured Snippet, however it is best to discover sufficient legitimate instances the place your clicks had been affected by AI Overviews (previously Search Generative Expertise or “SGE”).

2. How To Mix Search Site visitors Knowledge With Engagement Metrics From GA4

When analyzing search site visitors, it’s vital to grasp how a lot customers interact with content material as a result of consumer engagement alerts are rating components. Please be aware that I don’t imply the precise metrics outlined in GA4.

Nonetheless, GA4’s engagement metrics – akin to “common engagement time per session,” which is the typical time your web site was in focus in a consumer’s browser – could trace at whether or not your articles are adequate for customers to learn.

Whether it is too low, it means your weblog pages could have a difficulty, and customers don’t learn them.

For those who mix that metric with Search Console information, you might discover that pages with low rankings even have a low common engagement time per session.

Please be aware that GA4 and GSC have completely different sourcattribution fashions. GA4 makes use of last-click attribution mannequin, which suggests if one visits from Google to an article web page as soon as after which comes again straight two extra instances, GA4 could attribute all three visits to Google, whereas GSC will report just one.

So, it’s not 100% correct and will not be appropriate for company reporting, however having engagement metrics from GA4 alongside GSC information offers beneficial data to research your rankings’ correlations with engagement.

Utilizing ChatGPT with BigQuery requires just a little preparation. Earlier than we soar into the immediate, I recommend you learn how GA4 tables are structured, as it’s not so simple as GSC’s tables.

It has an event_params column, which has a file sort and incorporates dimensions like page_location, ga_session_id, and engagement_time_msec. It tracks how lengthy a consumer actively engages together with your web site.

event_params key engagement_time_msec is just not the full time on the positioning however the time spent on particular interactions (like clicking or scrolling), when every interplay provides a brand new piece of engagement time. It’s like including up all of the little moments when customers are actively utilizing your web site or app.

Subsequently, if we sum that metric and common it throughout periods for the pages, we get hold of the typical engagement time per session.

Now, when you perceive engagement_time_msec , let’s ask ChatGPT to assist us assemble a question that pulls GA4 “common engagement time per session” for every URL and combines it with GSC search efficiency information of articles.

The immediate I might use is:

Think about you're a information analyst skilled in Google Analytics 4 (GA4), Google Search Console, SQL, and BigQuery.

Compose a SQL question that pulls the next information from Google Search Console for every URL for the earlier 7 days, excluding the present day:

1. Clicks,

2. Impressions,

3. Common place (calculated because the sum of positions divided by the sum of impressions).

From GA4 BigQuery desk unnest from event_params ga_session_id, engagement_time_msec and page_location.

Choose solely rows which have engagement_time_msec set as not null group all periods with the identical IDs and page_location and SUM engagement_time_msec and devides to SUM of periods quantity

Be a part of GA4 and Google Search Console information by URLs for a similar interval.

Additonally Optimize the question to tug from GA4's desk partitions and never question your entire desk.

Particulars:

1. BigQuery venture identify: use_your_bigquery_projectname

2. GA4 dataset identify: use_your_ga4_bigquery_dataset_name

3. GA4 desk identify: use_your_ga4_bigquery_table_name_under_dataset

3. Google Search Console dataset identify: use_your_gsc_bigquery_table_name_under_dataset

3. Google Search Console desk identify: use_your_gsc_bigquery_table_name_under_dataset

4. Right here is BigQuery tables schemas for GA4: [copy table schema here]

5. Right here is BigQuery tables schemas for Google Search Console: [copy table schema here]

As soon as I copied and pasted into BigQuery, it gave me outcomes with “common engagement time per session” being all nulls. So, apparently, ChatGPT wants extra context and steering on how GA4 works.

I’ve helped to supply further data as a follow-up query from GA4’s official documentation on the way it calculates engagement_time_msec. I copied and pasted the doc into the follow-up immediate and requested to check with that data when composing the question, which helped. (For those who get any syntax error, simply copy/paste it as a follow-up query and ask to repair it.)

On account of quarter-hour of effort, I acquired the proper SQL:

WITH

-- Step 1: Filter GA4 information for the final 7 days and unnest event_params

ga4_data AS (

SELECT

event_date,

event_timestamp,

(SELECT worth.int_value FROM UNNEST(event_params) WHERE key = 'ga_session_id') AS ga_session_id,

(SELECT worth.int_value FROM UNNEST(event_params) WHERE key = 'engagement_time_msec') AS engagement_time_msec,

(SELECT worth.string_value FROM UNNEST(event_params) WHERE key = 'page_location') AS page_location

FROM

`your_bq_project_name.your_bq_ga4_dataset.events_*`

WHERE

_TABLE_SUFFIX BETWEEN FORMAT_DATE('%Ypercentmpercentd', DATE_SUB(CURRENT_DATE(), INTERVAL 8 DAY))

AND FORMAT_DATE('%Ypercentmpercentd', DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY))

AND (SELECT worth.int_value FROM UNNEST(event_params) WHERE key = 'engagement_time_msec') IS NOT NULL

),

-- Step 2: Mixture GA4 information by session ID and web page location

aggregated_ga4_data AS (

SELECT

page_location,

SUM(engagement_time_msec/1000) / NULLIF(COUNT(DISTINCT ga_session_id), 0) AS avg_engagement_time_msec

FROM

ga4_data

GROUP BY

page_location

),

-- Step 3: Filter GSC information for the final 7 days and choose urls which had clicks

gsc_data AS (

SELECT

url,

SUM(clicks) AS clicks,

SUM(impressions) AS impressions,

SUM(sum_position) / SUM(impressions) AS avg_position

FROM

`your_bq_project_name.searchconsole.searchdata_url_impression`

WHERE

data_date BETWEEN DATE_SUB(CURRENT_DATE(), INTERVAL 8 DAY) AND DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY)

and

clicks > 0

GROUP BY

url

)

-- Becoming a member of Google Search Console information with GA4 information by page_location and url

SELECT

gsc.url,

gsc.clicks,

gsc.impressions,

gsc.avg_position,

ga4.avg_engagement_time_msec

FROM

gsc_data AS gsc

LEFT JOIN

aggregated_ga4_data AS ga4

ON

gsc.url = ga4.page_location

ORDER BY

gsc.clicks DESC;

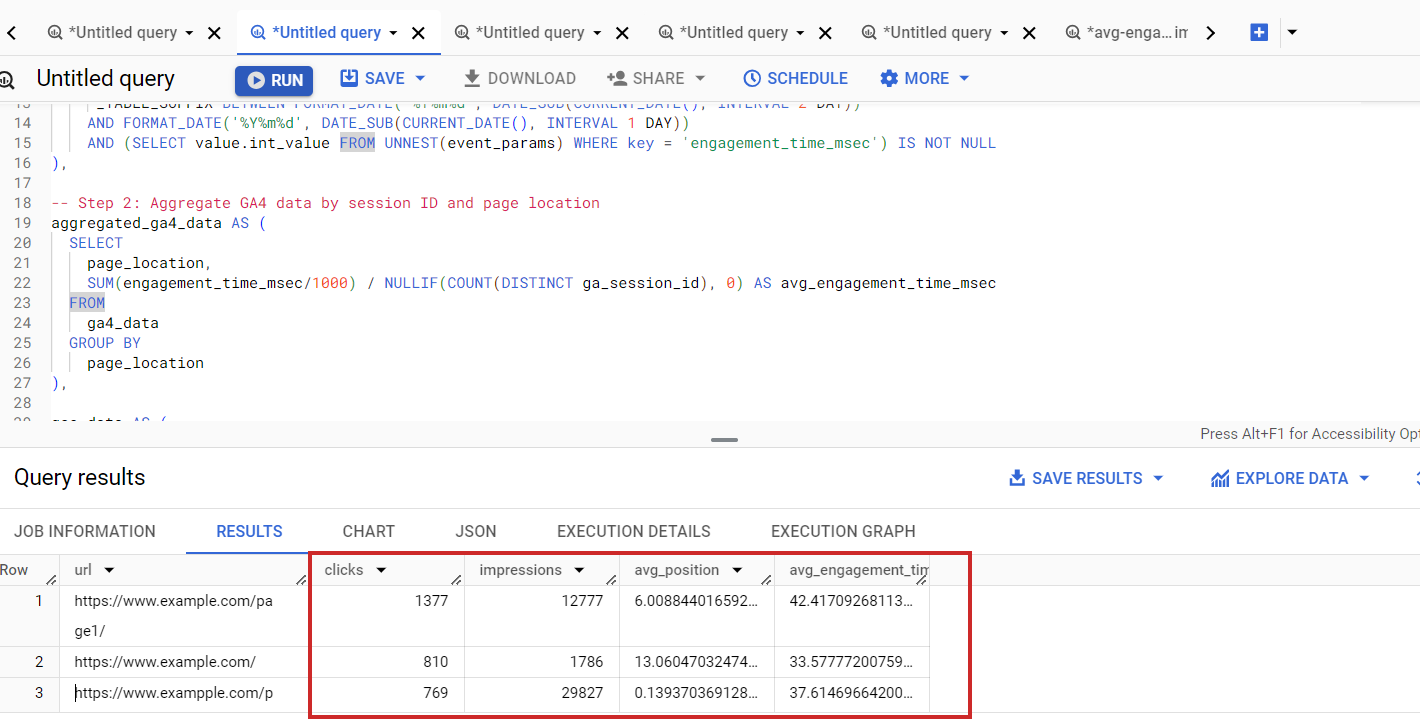

This pulls GSC information with engagement metrics from GA4.

Search Console mixed information with GA4

Search Console mixed information with GA4Please be aware that you just would possibly discover discrepancies between the numbers within the GA4 UI and the information queried from BigQuery tables.

This occurs as a result of GA4 focuses on “Lively Customers” and teams uncommon information factors into an “(different)” class, whereas BigQuery exhibits all uncooked information. GA4 additionally makes use of modeled information for gaps when consent isn’t given, which BigQuery doesn’t embrace.

Moreover, GA4 could pattern information for faster reviews, whereas BigQuery contains all information. These variations imply GA4 presents a fast overview, whereas BigQuery offers detailed evaluation. Be taught a extra detailed clarification of why this occurs on this article.

Maybe you might attempt modifying queries to incorporate solely lively customers to convey outcomes one step nearer to GA4 UI.

Alternatively, you should use Looker Studio to mix information, however it has limitations with very giant datasets. BigQuery presents scalability by processing terabytes of knowledge effectively, making it supreme for large-scale web optimization reviews and detailed analyses.

Its superior SQL capabilities enable advanced queries for deeper insights that Looker Studio or different dashboarding instruments can not match.

Conclusion

Utilizing ChatGPT’s coding skills to compose BigQuery queries to your reporting wants elevates you and opens new horizons the place you may mix a number of sources of knowledge.

This demonstrates how ChatGPT can streamline advanced information evaluation duties, enabling you to concentrate on strategic decision-making.

On the similar time, these examples taught us that people completely must function AI chatbots as a result of they could hallucinate or produce incorrect solutions.

Extra sources:

Featured Picture: NicoElNino/Shutterstock