Crawl price range is an important website positioning idea for big web sites with thousands and thousands of pages or medium-sized web sites with a couple of thousand pages that change every day.

An instance of an internet site with thousands and thousands of pages could be eBay.com, and web sites with tens of hundreds of pages that replace steadily could be consumer opinions and score web sites much like Gamespot.com.

There are such a lot of duties and points an website positioning knowledgeable has to think about that crawling is commonly placed on the again burner.

However crawl price range can and must be optimized.

On this article, you’ll be taught:

- The right way to enhance your crawl price range alongside the way in which.

- Go over the adjustments to crawl price range as an idea within the final couple of years.

(Be aware: When you have an internet site with only a few hundred pages, and pages usually are not listed, we advocate studying our article on frequent points inflicting indexing issues, as it’s definitely not due to crawl price range.)

What Is Crawl Price range?

Crawl price range refers back to the variety of pages that search engine crawlers (i.e., spiders and bots) go to inside a sure timeframe.

There are particular issues that go into crawl price range, reminiscent of a tentative steadiness between Googlebot’s makes an attempt to not overload your server and Google’s total want to crawl your area.

Crawl price range optimization is a collection of steps you’ll be able to take to extend effectivity and the speed at which serps’ bots go to your pages.

Why Is Crawl Price range Optimization Vital?

Crawling is step one to showing in search. With out being crawled, new pages and web page updates gained’t be added to look engine indexes.

The extra typically that crawlers go to your pages, the faster updates and new pages seem within the index. Consequently, your optimization efforts will take much less time to take maintain and begin affecting your rankings.

Google’s index comprises lots of of billions of pages and is rising every day. It prices serps to crawl every URL, and with the rising variety of web sites, they wish to scale back computational and storage prices by lowering the crawl price and indexation of URLs.

There may be additionally a rising urgency to scale back carbon emissions for local weather change, and Google has a long-term technique to enhance sustainability and scale back carbon emissions.

These priorities might make it tough for web sites to be crawled successfully sooner or later. Whereas crawl price range isn’t one thing you must fear about with small web sites with a couple of hundred pages, useful resource administration turns into an vital concern for enormous web sites. Optimizing crawl price range means having Google crawl your web site by spending as few sources as potential.

So, let’s talk about how one can optimize your crawl price range in right this moment’s world.

1. Disallow Crawling Of Motion URLs In Robots.Txt

Chances are you’ll be shocked, however Google has confirmed that disallowing URLs won’t have an effect on your crawl price range. This means Google will nonetheless crawl your web site on the identical price. So why will we talk about it right here?

Effectively, in case you disallow URLs that aren’t vital, you principally inform Google to crawl helpful components of your web site at the next price.

For instance, in case your web site has an inside search function with question parameters like /?q=google, Google will crawl these URLs if they’re linked from someplace.

Equally, in an e-commerce web site, you might need side filters producing URLs like /?colour=purple&dimension=s.

These question string parameters can create an infinite variety of distinctive URL combos that Google might attempt to crawl.

These URLs principally don’t have distinctive content material and simply filter the info you might have, which is nice for consumer expertise however not for Googlebot.

Permitting Google to crawl these URLs wastes crawl price range and impacts your web site’s total crawlability. By blocking them through robots.txt guidelines, Google will focus its crawl efforts on extra helpful pages in your web site.

Right here is the way to block inside search, aspects, or any URLs containing question strings through robots.txt:

Disallow: *?*s=*

Disallow: *?*colour=*

Disallow: *?*dimension=*

Every rule disallows any URL containing the respective question parameter, no matter different parameters that could be current.

- * (asterisk) matches any sequence of characters (together with none).

- ? (Query Mark): Signifies the start of a question string.

- =*: Matches the = signal and any subsequent characters.

This method helps keep away from redundancy and ensures that URLs with these particular question parameters are blocked from being crawled by serps.

Be aware, nevertheless, that this methodology ensures any URLs containing the indicated characters will likely be disallowed regardless of the place the characters seem. This may result in unintended disallows. For instance, question parameters containing a single character will disallow any URLs containing that character no matter the place it seems. Should you disallow ‘s’, URLs containing ‘/?pages=2’ will likely be blocked as a result of *?*s= matches additionally ‘?pages=’. If you wish to disallow URLs with a selected single character, you need to use a mixture of guidelines:

Disallow: *?s=*

Disallow: *&s=*The important change is that there isn’t any asterisk ‘*’ between the ‘?’ and ‘s’ characters. This methodology lets you disallow particular precise ‘s’ parameters in URLs, however you’ll want so as to add every variation individually.

Apply these guidelines to your particular use instances for any URLs that don’t present distinctive content material. For instance, in case you might have wishlist buttons with “?add_to_wishlist=1” URLs, you must disallow them by the rule:

Disallow: /*?*add_to_wishlist=*It is a no-brainer and a pure first and most vital step beneficial by Google.

An instance beneath reveals how blocking these parameters helped to scale back the crawling of pages with question strings. Google was attempting to crawl tens of hundreds of URLs with completely different parameter values that didn’t make sense, resulting in non-existent pages.

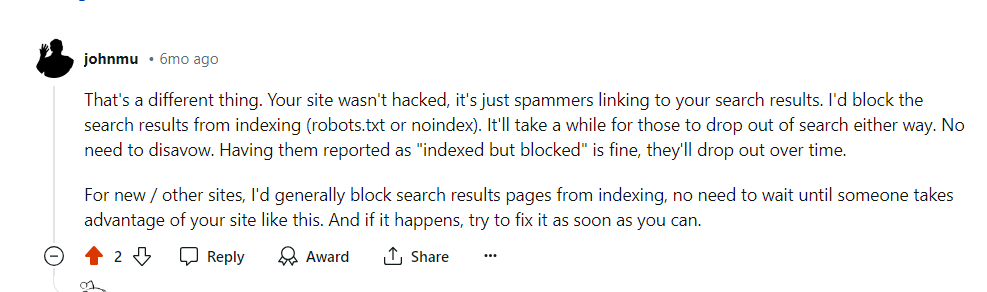

Nevertheless, generally disallowed URLs would possibly nonetheless be crawled and listed by serps. This will appear unusual, but it surely isn’t usually trigger for alarm. It often signifies that different web sites hyperlink to these URLs.

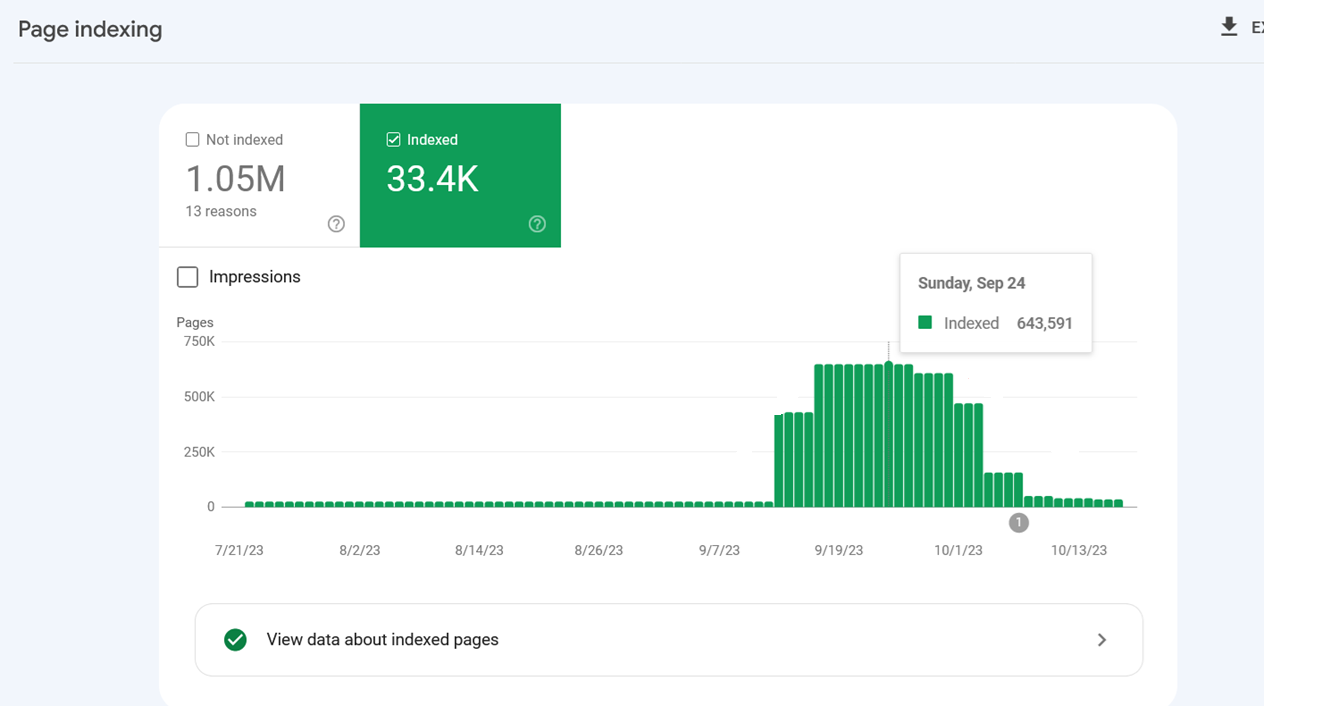

Indexing spiked as a result of Google listed inside search URLs after they have been blocked through robots.txt.

Indexing spiked as a result of Google listed inside search URLs after they have been blocked through robots.txt.Google confirmed that the crawling exercise will drop over time in these instances.

Google’s touch upon Reddit, July 2024

Google’s touch upon Reddit, July 2024One other vital advantage of blocking these URLs through robots.txt is saving your server sources. When a URL comprises parameters that point out the presence of dynamic content material, requests will go to the server as an alternative of the cache. This will increase the load in your server with each web page crawled.

Please keep in mind to not use “noindex meta tag” for blocking since Googlebot has to carry out a request to see the meta tag or HTTP response code, losing crawl price range.

1.2. Disallow Unimportant Useful resource URLs In Robots.txt

Apart from disallowing motion URLs, you could wish to disallow JavaScript recordsdata that aren’t a part of the web site structure or rendering.

For instance, in case you have JavaScript recordsdata answerable for opening pictures in a popup when customers click on, you’ll be able to disallow them in robots.txt so Google doesn’t waste price range crawling them.

Right here is an instance of the disallow rule of JavaScript file:

Disallow: /property/js/popup.js

Nevertheless, it’s best to by no means disallow sources which can be a part of rendering. For instance, in case your content material is dynamically loaded through JavaScript, Google must crawl the JS recordsdata to index the content material they load.

One other instance is REST API endpoints for type submissions. Say you might have a type with motion URL “/rest-api/form-submissions/”.

Doubtlessly, Google might crawl them. These URLs are by no means associated to rendering, and it might be good apply to dam them.

Disallow: /rest-api/form-submissions/

Nevertheless, headless CMSs typically use REST APIs to load content material dynamically, so ensure you don’t block these endpoints.

In a nutshell, take a look at no matter isn’t associated to rendering and block them.

2. Watch Out For Redirect Chains

Redirect chains happen when a number of URLs redirect to different URLs that additionally redirect. If this goes on for too lengthy, crawlers might abandon the chain earlier than reaching the ultimate vacation spot.

URL 1 redirects to URL 2, which directs to URL 3, and so forth. Chains may also take the type of infinite loops when URLs redirect to at least one one other.

Avoiding these is a commonsense method to web site well being.

Ideally, you’ll be capable to keep away from having even a single redirect chain in your complete area.

However it could be an unimaginable job for a big web site – 301 and 302 redirects are sure to seem, and you may’t repair redirects from inbound backlinks merely since you don’t have management over exterior web sites.

One or two redirects right here and there may not harm a lot, however lengthy chains and loops can turn out to be problematic.

With a view to troubleshoot redirect chains you need to use one of many website positioning instruments like Screaming Frog, Lumar, or Oncrawl to search out chains.

Whenever you uncover a sequence, the easiest way to repair it’s to take away all of the URLs between the primary web page and the ultimate web page. When you have a sequence that passes by means of seven pages, then redirect the primary URL on to the seventh.

One other nice method to scale back redirect chains is to interchange inside URLs that redirect with last locations in your CMS.

Relying in your CMS, there could also be completely different options in place; for instance, you need to use this plugin for WordPress. When you have a unique CMS, you could want to make use of a customized answer or ask your dev workforce to do it.

3. Use Server Facet Rendering (HTML) Each time Attainable

Now, if we’re speaking about Google, its crawler makes use of the most recent model of Chrome and is ready to see content material loaded by JavaScript simply positive.

However let’s assume critically. What does that imply? Googlebot crawls a web page and sources reminiscent of JavaScript then spends extra computational sources to render them.

Keep in mind, computational prices are vital for Google, and it needs to scale back them as a lot as potential.

So why render content material through JavaScript (shopper facet) and add further computational value for Google to crawl your pages?

Due to that, every time potential, it’s best to keep on with HTML.

That method, you’re not hurting your probabilities with any crawler.

4. Enhance Web page Velocity

As we mentioned above, Googlebot crawls and renders pages with JavaScript, which implies if it spends fewer sources to render webpages, the better it will likely be for it to crawl, which is dependent upon how effectively optimized your web site pace is.

Google says:

Google’s crawling is restricted by bandwidth, time, and availability of Googlebot cases. In case your server responds to requests faster, we’d be capable to crawl extra pages in your web site.

So utilizing server-side rendering is already an amazing step in the direction of enhancing web page pace, however you must be certain that your Core Net Important metrics are optimized, particularly server response time.

5. Take Care of Your Inner Hyperlinks

Google crawls URLs which can be on the web page, and at all times take into account that completely different URLs are counted by crawlers as separate pages.

When you have an internet site with the ‘www’ model, be certain that your inside URLs, particularly on navigation, level to the canonical model, i.e. with the ‘www’ model and vice versa.

One other frequent mistake is lacking a trailing slash. In case your URLs have a trailing slash on the finish, be certain that your inside URLs even have it.

In any other case, pointless redirects, for instance, “https://www.instance.com/sample-page” to “https://www.instance.com/sample-page/” will end in two crawls per URL.

One other vital side is to keep away from damaged inside hyperlinks pages, which may eat your crawl price range and smooth 404 pages.

And if that wasn’t dangerous sufficient, additionally they harm your consumer expertise!

On this case, once more, I’m in favor of utilizing a device for web site audit.

WebSite Auditor, Screaming Frog, Lumar or Oncrawl, and SE Rating are examples of nice instruments for an internet site audit.

6. Replace Your Sitemap

As soon as once more, it’s an actual win-win to handle your XML sitemap.

The bots may have a a lot better and simpler time understanding the place the interior hyperlinks lead.

Use solely the URLs which can be canonical on your sitemap.

Additionally, ensure that it corresponds to the most recent uploaded model of robots.txt and masses quick.

7. Implement 304 Standing Code

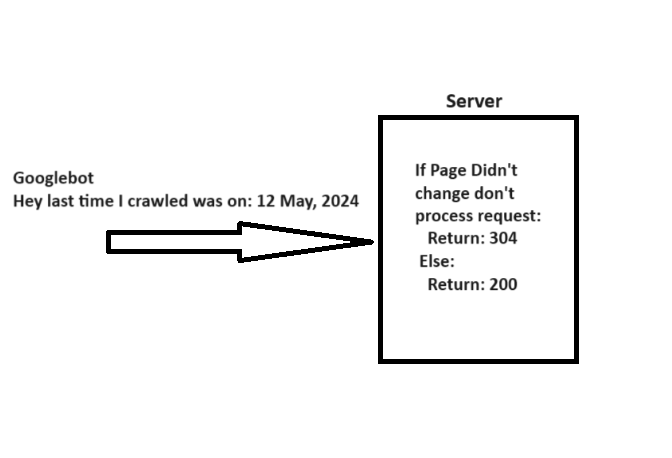

When crawling a URL, Googlebot sends a date through the “If-Modified-Since” header, which is extra details about the final time it crawled the given URL.

In case your webpage hasn’t modified since then (laid out in “If-Modified-Since“), you could return the “304 Not Modified” standing code with no response physique. This tells serps that webpage content material didn’t change, and Googlebot can use the model from the final go to it has on the file.

A easy rationalization of how 304 not modified http standing code works.

A easy rationalization of how 304 not modified http standing code works.Think about what number of server sources it can save you whereas serving to Googlebot save sources when you might have thousands and thousands of webpages. Fairly massive, isn’t it?

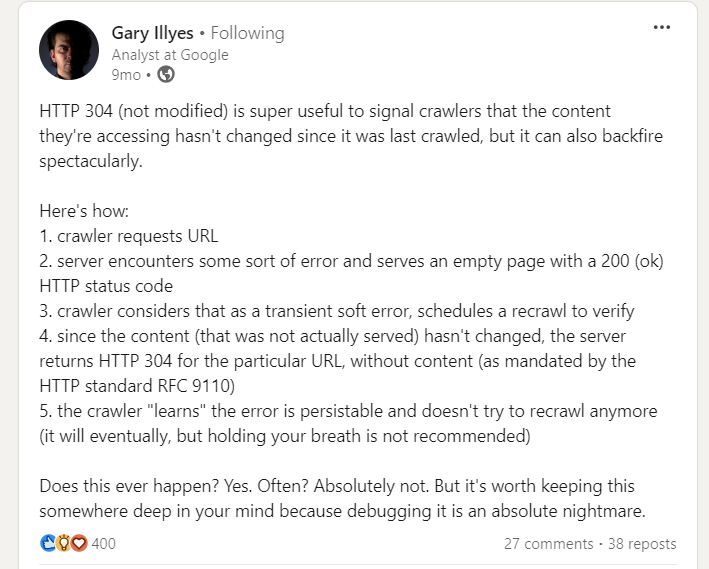

Nevertheless, there’s a caveat when implementing 304 standing code, identified by Gary Illyes.

Gary Illes on LinkedIn

Gary Illes on LinkedInSo be cautious. Server errors serving empty pages with a 200 standing could cause crawlers to cease recrawling, resulting in long-lasting indexing points.

8. Hreflang Tags Are Important

With a view to analyze your localized pages, crawlers make use of hreflang tags. Try to be telling Google about localized variations of your pages as clearly as potential.

First off, use the <hyperlink rel="alternate" hreflang="lang_code" href="https://www.searchenginejournal.com/technical-seo/tips-to-optimize-crawl-budget-for-seo/url_of_page" /> in your web page’s header. The place “lang_code” is a code for a supported language.

You must use the <loc> aspect for any given URL. That method, you’ll be able to level to the localized variations of a web page.

Learn: 6 Frequent Hreflang Tag Errors Sabotaging Your Worldwide website positioning

9. Monitoring and Upkeep

Examine your server logs and Google Search Console’s Crawl Stats report to observe crawl anomalies and establish potential issues.

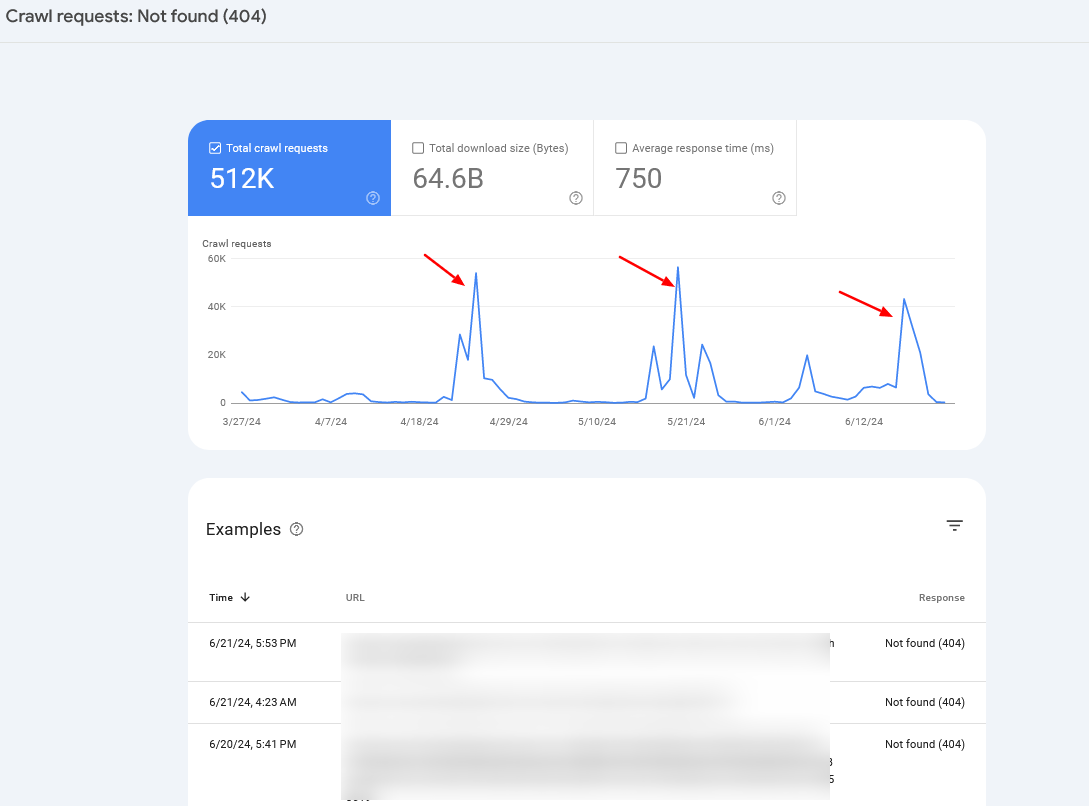

Should you discover periodic crawl spikes of 404 pages, in 99% of instances, it’s brought on by infinite crawl areas, which we’ve mentioned above, or signifies different issues your web site could also be experiencing.

Crawl price spikes

Crawl price spikesTypically, you could wish to mix server log info with Search Console information to establish the basis trigger.

Abstract

So, in case you have been questioning whether or not crawl price range optimization continues to be vital on your web site, the reply is clearly sure.

Crawl price range is, was, and doubtless will likely be an vital factor to remember for each website positioning skilled.

Hopefully, the following pointers will assist you optimize your crawl price range and enhance your website positioning efficiency – however keep in mind, getting your pages crawled doesn’t imply they are going to be listed.

In case you face indexation points, I recommend studying the next articles:

Featured Picture: BestForBest/Shutterstock

All screenshots taken by creator